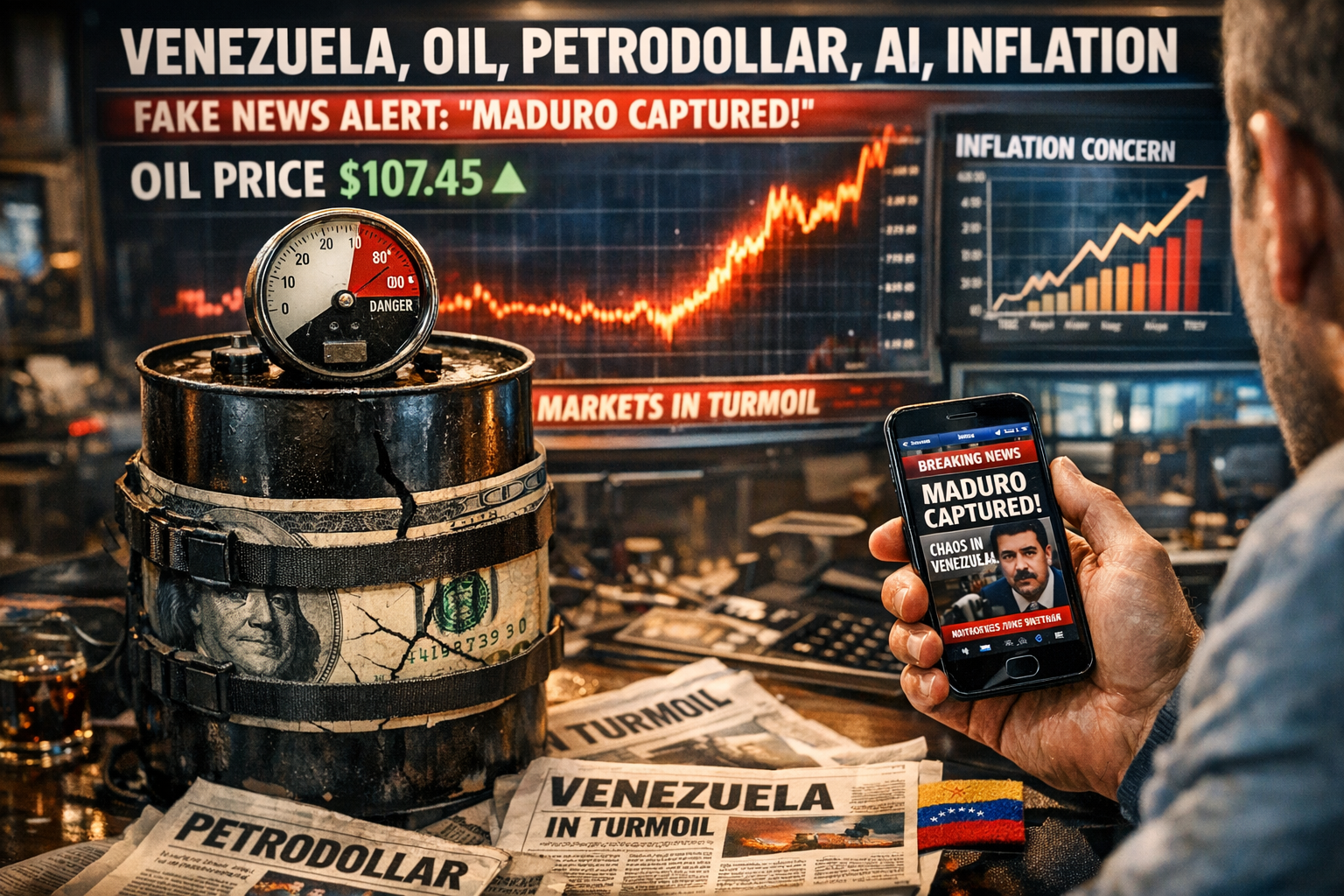

● Starbucks Identity Crash, From Third Place to Convenience Pickup

“Starbucks Today, More Like a Convenience Store than a Café?” Why Brand Identity Dilution is Scarier than Declining Sales

This article covers these topics.

① Why Starbucks shifted from a ‘third place’ to a ‘stop-and-go’ place.

② Structural reasons why the Korean coffee market made Starbucks “ordinary.”

③ The decisive reason why ‘Back to Starbucks’ might struggle to succeed (the environment isn’t what it was 20 years ago).

④ The clash between efficiency (mobile orders/DT) and experience (relationships/spaces).

⑤ An outline of the “real core points” that other news/YouTube channels often miss.

1) Core News Briefing: What does it mean when people say “Starbucks’ identity has become unclear”?

One-line summary

Starbucks used to sell a symbol of ‘luxury/hipness/American culture,’ but now, with its mix of menu items, goods, meals, and pickup orders, it is perceived as “ordinary, no longer special.”

Observation Points (Based on the Original Text)

Lately, Starbucks feels more like a “place to rush through” than a “place to stay.”

The mix of coffee, bakery items, and goods has diluted the café’s central focus.

Busy stores increasingly feel like convenience stores, worsening sit-in experiences.

2) Starbucks in the 2000s vs. Now: What Has Changed?

2-1. The Past: When a Cup Proved Status/Taste

There were fewer comparable alternatives back then.

The Starbucks cup (Siren logo) functioned as a “symbol of premium.”

The brand itself embodied the idea of ‘admiration for America.’

2-2. The Present: Not Premium, but a ‘Standard Value (Ordinary)’

The café competition in Korea is extremely fierce, and local cafés have significantly improved.

The era when international brands automatically succeeded is over.

Consumers no longer assume that “foreign brand = better.”

Key takeaway

The brand is now more about “how people perceive it” than its hardware (interior design).

3) The Unique Structure Causing Starbucks in Korea to Become More Like a ‘Convenience Store’

3-1. The Korean Coffee Market’s ‘Rapid Growth Rate’

Seoul is said to have more than 30,000 cafés, so competition is extremely intense.

New concepts/flavors/spaces continuously emerge, diluting Starbucks’ “relative specialness.”

3-2. Starbucks’ Real Estate Power Remains, but the ‘Illusion’ has Faded

Starbucks is found in prime locations.

In the past, having a Starbucks branch was seen as a formula for raising a building’s value.

But as the brand’s position weakens, doubts arise over whether it still brings the same level of traffic/premium.

3-3. Korean-style Starbucks Locations (Gyeongdong Market, Jangchung, etc.) Boast Excellent Spaces

Specialized Korean stores, absent overseas, receive high marks for their interior/content.

The issue is that “having good stores” and “raising the overall brand perception” are not the same.

4) The Dilemma of Global Starbucks: As Efficiency Increases, Experience Diminishes

4-1. Menu Expansion → Increased Operational Complexity → Longer Wait Times

More menu items mean extended processes, complicating orders/preparation/pickup.

Mobile ordering seems convenient, but walk-in customers face uncertain waiting times, not knowing how many people are ahead.

4-2. DT (Drive-Thru) and Mobile-Centric Operations Favor ‘Sales Optimization,’ but at a Cost

If customers who want to sit down and enjoy the experience feel left out, brand value can erode rapidly.

In essence, efficiency and experience are hard to maximize at the same time, and pushing past the balance point leads to identity erosion.

4-3. Facing Competition from Above and Below (Sandwiched Position)

Above are more luxurious specialty cafés focused on taste.

Below are more affordable and faster low-cost/high-efficiency coffee options.

Starbucks is expensive, yet maintaining the ‘specialness worth the price’ has become difficult.

In such an environment, when tight monetary policies and consumption slowdowns occur, the “ordinary premium” often takes the first hit.

(Consumers typically cut spending in the ‘interchangeable middle ground’ first.)

5) Why “Back to Starbucks” is Risky: The Environment is Not ‘The Same’ Anymore

The crux is this.

Twenty years ago, Starbucks was a ‘new player’ with weak competition.

Now, Starbucks is a brand that must ‘defend’ itself.

Thus, merely suggesting a return to the old sentiment is insufficient.

Consumer preferences have diversified into specialty/authenticity/experience/speed/delivery, and competitors are already optimized in these areas.

Today’s generations, in particular, are not impressed by “predictable familiarity.”

Familiarity is the baseline; to become ‘special again,’ Starbucks must offer something beyond it.

6) The “Most Important Points” Often Missed by Other News/YouTube Outlets

6-1. Starbucks’ Crisis is More About ‘Perception Tipping Point’ than ‘Sales’

Brand perception deteriorates slowly but collapses quickly once a critical threshold is breached.

If framed as “tacky/awkward,” even promotional efforts might backfire.

This cannot be reversed with short-term fixes; it is a ‘structural improvement’ problem that takes time.

6-2. ‘Relational Aspect (Barista-Customer)’ is Hard to Manage Numerically, Thus More Crucial

Starbucks’ resilience originally emerged from “relationships.”

Transitioning to mobile/pickup-focused orders drastically reduces conversations and touchpoints.

Fewer touchpoints lead to a loss of emotional capital, making it tougher to justify price premiums.

6-3. It’s a Matter of ‘How People Feel About the Brand,’ Not ‘What to Add’

Debating whether to increase sandwiches or enhance goods misses the essence.

Consumers must once again have that one sentence in their head: “Starbucks is this brand.”

If this collapses, the company ends up relying on short-term tactics like pricing/coupons/discounts, which in turn erode the premium further.

7) Viewing Starbucks Issues from an Economic/Industrial Perspective: Ultimately, ‘Branding is a Productivity Game’

The Starbucks case isn’t just about coffee; it’s tied to issues all modern companies face.

As expansion (scale) and efficiency (operational optimization) are pushed, experience/relationships/identity get diluted.

This is a common dilemma for retail companies experiencing reduced “offline presence” during digital transformations.

Amid ongoing talk of a possible economic downturn and heightened consumer sensitivity, “replaceable premiums” may weaken faster than expected.

Conversely, brands that redefine their identity and redesign experiences become stronger even in a recession.

< Summary >

The problem is not that Starbucks’ decor has worsened, but that its ‘specialness’ in consumers’ minds has been diluted.

With intense café competition in Korea, Starbucks’ relative premium is quickly diminishing.

As efficiency is pushed through menu expansion, DT, and mobile orders, waits grow longer while relationships and experience shrink, leading to identity erosion.

‘Back to Starbucks’ is insufficient since it’s not the same environment as 20 years ago; a redefinition is necessary.

The real core points are the perception tipping point, relational capital, and redefining “how people feel about the brand.”

*Source: [ 티타임즈TV ]

– “스타벅스 정체성이 불분명해졌다” (“Starbucks’ Identity Has Become Unclear”; Choi Won-seok, CEO of Project Rent)

● CEOs Next, AI-Slammed Jobs, Content Shock

If an Einstein-level AI arrives, is there a realm where only humans remain? (And this is where the market truly diverges)

Today’s article contains these core points.

First, why saying “CEOs are not safe” is realistic and how the job landscape is being reshaped.

Second, what the phenomenon of 97% of American consumers being unable to distinguish AI music means for the ‘content market structure’.

Third, where AI first penetrates in art (and where it remains the longest) by neatly dividing it into “commercial art vs. pure art”.

Fourth, the critical structural transition that YouTube/news rarely covers: “The competition in creation shifts from ‘how’ to ‘what’”.

Fifth, why the claim that “AI learning autonomy through art/creation” is the most dangerous path is controversial.

1) News Briefing: Summarizing Only the ‘Facts’ of This Conversation

[Field Summary]

KAIST neuroscientist Professor Kim Dae-sik and choreographer Kim Hye-yeon, who is actually utilizing AI as a creative partner, discuss “the role of humans in the AI era and the redefinition of art/jobs”.

[Core Point 1 — CEOs Are Replaceable Too]

A concern that AI can automate even CEO roles as it penetrates beyond simple clerical and production jobs into areas of “decision-making, planning, and management”.

[Core Point 2 — ‘Employment Replacement’ Is Already Happening in Creative Fields]

Choreographer example: Describes an experience where AI replaced some roles in music/video/dramaturgy/planning, reducing the number of creators needed for a project from 20 to 5.

In other words, this is not a ‘someday’ scenario; the cost structure is already changing.

[Core Point 3 — Consumers Can’t Distinguish Already]

The conversation cites a blind test in which 97% of American consumers could not distinguish human-made music from AI-made music.

The point is that “human creation’s line of defense could crumble if it competes on quality alone.”

[Core Point 4 — AI as the Best ‘Tool’, ‘Autonomy’ is Dangerous]

While it is positive to use AI as a tool, there’s a viewpoint that if AI starts doing “true creation (rejecting existing frameworks + creating new rules)”, it could learn autonomy, which is risky.

[Core Point 5 — When Robotics (Physical AI) Comes, the Meaning of ‘Body’ Changes Again]

With the observation that hands-on jobs (like plumbers) might survive, a more fundamental question is posed: “How much of the human body is necessary when humanoids/robots become a reality?”

2) True Conclusion of This Conversation: Both Art and Jobs Crumble from Areas With “Right Answers”

There is a structurally important sentence here.

“Commercial art has right answers, while pure art doesn’t.”

AI is optimized to produce results close to the right answer “fast, cheaply, and reliably.”

Thus, substitution happens faster in areas where right answers exist (that is, areas judged by market response, revenue, or conversion rates).

Examples of Areas With Right Answers (Fast Substitution)

Advertising music, background music, short-form videos, brand design options, YouTube thumbnails/copy, and genre works with ‘well-selling’ formulas.

Here, productivity is directly linked to cost savings, and companies move based on ROI.

Examples of Areas Without Right Answers (Slow Substitution)

Works questioning “who am I”, projects where discomfort and inefficiency themselves are meaningful, works that ‘shake’ the audience rather than ‘understand’ them.

That is, areas where the process/narrative/existential questions are more important than the end product.

3) Economic Perspective: Why Companies Push AI Creation—Not for ‘Emotion’, But for ‘Risk/Cost/Predictability’

There is one subtly important point in this talk.

Professor Kim Dae-sik’s perspective sounds like a tech story but is actually about corporate financial logic.

How AI Actors/AI Creations Become a “Heaven-sent Opportunity” for Companies

In industries where labor costs form a large portion of production costs (film/TV/advertising), cost volatility is high.

Add variables such as scandals, conditions, and contracts, and “project risk” rises further.

AI, once technically feasible, offers companies overwhelming predictability and cost control.

This is the intersection of productivity innovation, cost savings, and digital transformation often mentioned in today’s global economy.

Ultimately, companies prioritize “a more stable revenue structure” over “a more moving piece of art”.

4) The ‘Most Important Content’ That YouTube/News Rarely Addresses (My Perspective)

1) Creation Competition Shifts from “How It Was Made” to “What Was Chosen”

Going forward, ‘performance ability/composing skills/editing techniques’ are unlikely to be what is scarce; the core competitive factor is more likely to be “which emotional piece to present, at what timing, to which audience, and in what context.”

Thus, rather than production capability, ‘curation+meaning assignment+situation design’ becomes the upper layer.

2) “Human Creation” Will Have ‘Story/Process’ As Its Premium, Not Quality

If 97% of American consumers can’t tell the difference, markets that differentiate on quality are already heading toward their end.

What’s left is the narrative value, such as “why was this piece created” and “who made it with what life experiences”.

Therefore, labels/certifications like ‘Made by Human’ could potentially evolve into actual market mechanisms.

3) AI Adoption Comes More as ‘Team Structure Reshuffle’ Than ‘Employment Cut’

The choreographer’s example of shrinking a team from 20 to 5 is striking, but what is more likely to happen is not “firing people” but “not hiring for that role in the first place.”

That is, as new recruitments dry up, the industrial structure changes.

4) Demand for ‘Pure Art’ Might Actually Grow (Paradoxical Growth Sector)

As AI reduces repetitive labor, people ask “Who am I” more frequently.

It’s symbolic that retired executives often first pose the question “Who was I?”.

This means that demand based on “existence/identity/senses” could increase in the content market, and, if designed well, it could become a new growth industry.

5) The Real Risk Is Not ‘AI Doing Art’, But ‘AI Learning Autonomy Through Art’

Autonomous AI risk is usually imagined only in military, hacking, or research-lab settings, but this conversation raises the view that “creation is training for rejecting existing rules and creating new ones.”

This is likely to be a growing topic in policy/regulation debates moving forward.

5) Practical Application: In the AI Era, Think of a Safe ‘Skill Portfolio’ Instead of ‘Safe Jobs’

This conversation carries a single message.

Instead of seeking safety in a “job”, have a skill combination that works in any environment.

(1) Sensory-Based Observational Skill

The point emphasized by the choreographer: humans interpret the world based on sensory organs, not data input.

Enhancing how to see/hear/feel ‘in a more detailed way’ becomes a unique human strength.

(2) Problem-Definition Ability (Asking Questions Without Right Answers)

Highlighted as a commonality of science and art.

AI can catch up with people who solve problems that have known answers, but deciding “what questions to ask” remains a core human weapon.

(3) Meaning Design (Context/Timing/Audience)

Future creation is likely to be closer to “editorial meaning design” rather than generating end products.

This skill connects directly to marketing, branding, planning, and product strategy in companies.

(4) Creative Workflow Utilizing AI as a ‘Partner’

In creative fields, labor cost structures are already changing.

An era where individuals work like teams is unfolding.

This leads to personal productivity innovation, fundamentally altering career leverage.

6) Reinterpreting with Investment/Industry Trends: “AI+Content+Robotics” Moves as a Single Cluster

The video is intriguing because it doesn’t stop at AI as software; it extends to physical AI (robotics), prompting a reevaluation of the “human body” at an industrial level.

The market is likely to move roughly in this sequence.

Enhancement of AI models → Automatic content creation → Restructuring of distribution platforms → Strengthened copyright/labeling regulations → Proliferation of humanoids → Labor market restructuring → Explosion of education/training markets.

This trend is hard for companies to stop even in a global economic slowdown.

That is because AI is directly tied to cost reduction and productivity innovation, and once a competitor adopts it, others are structurally compelled to follow.

(In an inflationary environment, investment continues to flow into technology that “lowers costs.”)

< Summary >

AI rapidly replaces areas with right answers (commercial art, planning, management), and the employment structure is already changing in actual creative fields.

In an era where consumers can’t distinguish AI creations, the premium of human creation becomes ‘story/process/meaning’ rather than ‘quality’.

The key competition shifts from “how to create” to “what to present, when, and to whom.”

The main risk is that AI could open a path to learning ‘autonomy’ through creation.

*Source: [ 지식인사이드 ]

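– 아인슈타인급 AI 나와도 흉내낼 수 없는 인간만의 영역ㅣ지식인초대석 EP.91 (“The Uniquely Human Realm That Even an Einstein-Level AI Can’t Imitate”; Professor Kim Dae-sik, choreographer Kim Hye-yeon)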

● Unlimited Context Disruption, RLM Shatters 1M Token Hype, Enterprise AI Costs Crash

“One Million Tokens in Context Window” Wasn’t the Answer, and the True Meaning of ‘Unlimited Context’ Opened by RLM (Recursive Language Models)

Today’s article contains just 4 core points.

First, why AI quietly deteriorates when longer prompts are provided (context rot).

Second, how MIT and Prime Intellect’s RLM can handle millions to tens of millions of tokens without needing “larger models.”

Third, data points that show actual cost reduction and accuracy improvement in benchmarks.

Fourth, how this structure opens up new markets (enterprise, agent, data infrastructure) from an economic and industrial perspective.

1) News Briefing: Why the “Longer Context = Correct Answer” Myth Was Broken

In recent years, the AI industry has raced to enlarge the context window: 8K → 32K → 100K → 1M tokens. In real use, however, longer inputs did not make models smarter; instead, declining performance and soaring costs kept recurring. Researchers have increasingly started calling this phenomenon context rot.

The key takeaway is this. Simply “cramming in all inputs” doesn’t help the model’s inference; rather, it tends to choke the model’s working memory. For problems that require ‘reading’ the entire input and ‘calculating relationships’ across it (linear/quadratic complexity), performance collapses rapidly as length increases.
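To make the “linear vs. quadratic” distinction concrete, here is a minimal sketch. The function names and chunk counts are illustrative assumptions, not taken from the source; the code only counts how much work a task implies when each chunk is read once versus when every chunk must be related to every other chunk.

```python
def linear_work(n_chunks: int) -> int:
    """Reading every chunk once: work grows in step with input length."""
    return n_chunks


def pairwise_work(n_chunks: int) -> int:
    """Relating every chunk to every other: n * (n - 1) / 2 unordered pairs."""
    return n_chunks * (n_chunks - 1) // 2


# Illustrative sizes only (assumed, not from any benchmark).
for n in (10, 100, 1_000):
    print(f"{n:>5} chunks -> read once: {linear_work(n):>5}, "
          f"compare pairs: {pairwise_work(n):>7}")
```

Going from 10 to 1,000 chunks multiplies the reading work by 100, while the number of pairs goes from 45 to 499,500. That gap is one way to see why relational questions suffer far more from length than simple lookups.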

This matters because enterprise data (logs, contracts, policy documents, code bases, knowledge bases) is generally long and noisy, and the questions asked of it are relational. In other words, if “long context” isn’t the solution, the ROI calculation for enterprise AI itself needs to be redone. This point connects directly to AI investment strategies, productivity, and digital transformation.

2) The Nature of the Problem: Context Rot Arises from the Pretense of “Reading Everything at Once”

The core point from the original text is this. Simple retrieval tasks (finding specific sentences in large documents) can somewhat withstand increased length. However, the moment there is a need to “synthesize multiple parts” or “calculate relationships between all items,” the accuracy of long-context models collapses quickly.

MIT’s analysis shows that as input length increases (from thousands to hundreds of thousands of tokens), the F1 score on certain tasks sinks toward the bottom of the scale. Importantly, performance has already collapsed “before reaching the hard limit (context cap).” Thus, the problem isn’t simply a ‘lack of tokens’; it arises because “the inference process is tied to the memory/attention structure.”
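For readers unfamiliar with the metric, F1 is the harmonic mean of precision and recall. The source does not say how the benchmark computes it, so the set-based version below is an assumption for illustration, and the sample answers are made up.

```python
def f1_score(predicted: set, expected: set) -> float:
    """Harmonic mean of precision and recall over two sets of answer items."""
    true_positives = len(predicted & expected)
    if true_positives == 0:
        return 0.0  # nothing correct: both precision and recall are zero
    precision = true_positives / len(predicted)
    recall = true_positives / len(expected)
    return 2 * precision * recall / (precision + recall)


expected = {"a", "b", "c", "d"}  # hypothetical gold answers
print(f1_score({"a", "b", "c", "d"}, expected))  # every item found, nothing extra
print(f1_score({"a", "x"}, expected))            # one hit, one wrong guess
print(f1_score({"x", "y"}, expected))            # nothing correct
```

A score near zero therefore means the model is both missing most of the expected items and returning mostly wrong ones, not merely being slightly imprecise.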

3) Solution Approach: RLM Is Not “AI That Consumes Prompts” but “AI That Searches for Information”

The perspective shift of RLM (Recursive Language Models) is simple. Instead of cramming large inputs into the model’s head, leave the inputs in an external environment (workspace) and let the model access only what is needed.

The analogy can be neatly stated as follows. Before: AI memorizes an entire book and takes an exam. RLM: The book is placed on the desk, and the AI reads and summarizes only the needed pages while solving the test questions.

Typically, the structure operates like this.

- The main model only coordinates. It decides what to view, what to search for, and which pieces to summarize.

- The full input (millions to tens of millions of tokens) is stored in the external workspace. The main model performs “lookup/search/excerpt” only for the required parts.

- Helper models (smaller and cheaper models) handle partitioned tasks. The main model doesn’t directly handle large text blocks; it outsources summarization, verification, and partial computations.

The word “recursive” matters here. Whenever needed, tasks are split again, helpers are called again, and results are verified step by step to build up the inference. Ultimately, AI inference shifts from being “memory (context)-centric” to “exploration-centric.”
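The structure described above can be sketched in a few lines. This is a toy under stated assumptions, not the MIT or Prime Intellect implementation: `Workspace`, `helper_model`, and `recursive_search` are hypothetical names, the “helper” is a plain keyword filter standing in for a small model call, and the coordinator splits ranges in half by a fixed rule, whereas in a real RLM a language model would decide what to look at.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Workspace:
    """Holds the full input outside the model; callers only ever see slices."""
    lines: List[str]

    def slice(self, start: int, end: int) -> List[str]:
        return self.lines[start:end]


def helper_model(lines: List[str], keyword: str) -> List[str]:
    """Stand-in for a small, cheap model call: keep only the relevant lines."""
    return [line for line in lines if keyword in line]


def recursive_search(ws: Workspace, keyword: str, start: int, end: int,
                     max_lines: int = 4) -> List[str]:
    """Coordinator: split the range until a piece fits a helper, then merge."""
    if end - start <= max_lines:
        return helper_model(ws.slice(start, end), keyword)
    mid = (start + end) // 2
    left = recursive_search(ws, keyword, start, mid, max_lines)
    right = recursive_search(ws, keyword, mid, end, max_lines)
    return left + right  # only the extracted evidence travels back up


# Hypothetical input: 20 log lines, two of which matter.
ws = Workspace([f"log line {i}: ok" for i in range(20)])
ws.lines[3] = "log line 3: ERROR disk full"
ws.lines[17] = "log line 17: ERROR timeout"
evidence = recursive_search(ws, "ERROR", 0, len(ws.lines))
print(evidence)
```

The point of the design is that no single call ever receives more than `max_lines` lines, yet the final answer is built from the whole input.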

4) Benchmark Results: It’s Quite Clear Where Accuracy Rises and Costs Fall

I’ll summarize the impressive numbers cited from the original text in context only (detailed figures may vary based on experimental conditions).

- In situations such as feeding in hundreds to thousands of documents at once (millions of words), which “normal LLMs can’t fully read in the first place,” RLM combined with top-tier models shows 90%-plus accuracy, with query costs lower than the existing “throw everything in and read” method.

- In Code QA (LongBench V2 series), accuracy improves step by step, from the base model alone, to a summary agent, to the RLM structure. Notably, one variation (ablation) shows significant improvement simply by “offloading context from the model.”

- In quadratic aggregation tasks like OOLONG-Pairs, general models’ F1 scores deteriorate to almost zero, but RLM raises them to meaningful levels. Hence, the structural advantage is greater not in “reading long documents” but in “calculating relationships.”

This is economically significant for the following reason. Enterprise work is less about merely “reading long documents” and more about “synthesizing multiple systems/documents/logs for decision-making,” which falls into the area of quadratic difficulty. If cost-performance improves here, the total cost of ownership (TCO) curve of AI adoption itself changes.

5) Prime Intellect’s Implementation Points: ‘RLMEnv’ Is More Practical from an Operational Standpoint

Prime Intellect presents an approach (RLMEnv) that refines MIT’s ideas into a more operationally feasible system. The details here are crucial.

- Input for the main model is “neatly restricted.” Large texts like web browsing results or tool outputs are not poured directly into the main model. The main model focuses on thinking (planning/decision-making), while exploration (search/collection) is handled by helpers.

- Exploration costs are reduced through mechanisms like batch execution (simultaneously processing several small tasks). This aspect is especially important from a productivity standpoint. If done “sequentially step-by-step,” costs and delays spike, but batching reduces that bottleneck.

- Strong rules for final answer composition (completion flag). The system enforces writing results to a specific location and then terminating, which prevents it from wandering endlessly.
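Two of the points above, batch execution and the completion flag, can be illustrated together. The sketch below is an assumption-laden toy rather than Prime Intellect’s code: `helper_summarize`, `run_batch`, `run_until_done`, and the `state` keys are hypothetical, and the helper is a trivial function where a real system would call a model. It uses only the standard library’s `ThreadPoolExecutor`.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def helper_summarize(chunk: str) -> str:
    """Stand-in for a helper-model call; here it just keeps the first word."""
    return chunk.split()[0]


def run_batch(chunks: List[str], max_workers: int = 4) -> List[str]:
    """Dispatch several small helper tasks at once instead of one by one."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(helper_summarize, chunks))  # keeps input order


def run_until_done(chunks: List[str], batch_size: int = 2,
                   max_steps: int = 10) -> Dict:
    """Loop until the answer is written and the completion flag is set."""
    state = {"notes": [], "final_answer": None, "done": False}
    for step in range(max_steps):  # hard cap so the loop cannot wander
        batch = chunks[step * batch_size:(step + 1) * batch_size]
        if not batch:  # nothing left to explore: write the answer and stop
            state["final_answer"] = ", ".join(state["notes"])
            state["done"] = True
            break
        state["notes"].extend(run_batch(batch))
    return state


result = run_until_done(["alpha report", "beta memo", "gamma log"])
print(result["done"], result["final_answer"])
```

Writing the answer to one agreed place (`final_answer`) and flipping one flag (`done`) gives the surrounding system an unambiguous stop signal; if `max_steps` runs out first, the flag stays `False` and the caller can treat the run as incomplete.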

To summarize, RLM isn’t just a paper idea but a design that can lead to “agent/workflow productization.”

6) “Character Differences” Among Models: Even with the Same Structure, Results Diverge

An intriguing part from the original text is this. Even when given the same RLM prompt/structure, some models explore cautiously, while others excessively split tasks and abuse calls. It was noted that adding just a line, “Don’t excessively call helpers,” changed the behavior.

This implies that future competitiveness lies not only in “model size,” but also in

- how well one sets exploration strategies

- how accurately one determines when to stop (early termination)

- how efficiently one performs validations

All of these point toward “operational intelligence,” which is particularly important for enterprise AI. After all, costs arise from call frequency and latency.
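One simple way to express “don’t excessively call helpers” in code, rather than only in a prompt, is a call budget combined with early termination. This is a hedged sketch: `CallBudget` and `explore` are hypothetical names, and the substring check stands in for a helper model’s verdict.

```python
from typing import List, Optional


class CallBudget:
    """Caps helper calls so a run cannot keep splitting and calling forever."""

    def __init__(self, max_calls: int) -> None:
        self.max_calls = max_calls
        self.used = 0

    def try_spend(self) -> bool:
        """Count one call and return True if budget remains, else False."""
        if self.used >= self.max_calls:
            return False
        self.used += 1
        return True


def explore(items: List[str], target: str, budget: CallBudget) -> Optional[str]:
    """Check items one call at a time; stop on a hit or when out of budget."""
    for item in items:
        if not budget.try_spend():
            return None  # budget exhausted: stop instead of wandering
        if target in item:  # stand-in for a helper model's verdict
            return item     # early termination: no need to look further
    return None


budget = CallBudget(max_calls=3)
found = explore(["a.txt", "b.txt", "target.txt", "c.txt"], "target", budget)
print(found, budget.used)
```

Because cost and latency both scale with the number of calls, the budget makes the worst case predictable, while the early return keeps the typical case cheap.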

7) The “Truly Important Line” That Other News/YouTube Often Miss

Everyone’s focusing on the sensational part of “unlimited context,” but the deeper core point is this.

The key takeaway of RLM is not the ‘number of tokens,’ but that it ‘redesigns the costs and reliability curves simultaneously by switching the inference structure from a memory base to an exploration base.’

This is crucial because the race for longer context ultimately collides head-on with infrastructure costs (training/inference compute). In contrast, RLM enhances performance by “altering the structure (at the inference stage),” so there is plenty of room to boost productivity even without overhauling the model architecture. If this direction grows, the market’s center of gravity shifts

- from competition to build ever-larger models

- to competition in systems that combine model + workspace + tooling + policies (interruption/verification/batching)

This point has the potential to alter the AI market’s value chain (who makes the money). It’s likely to grow together with sectors like cloud, data infrastructure, agent platforms, and security/governance.

8) Economic & Industrial Outlook: 3 ‘Realistic’ Applications RLM May Open Up

If this structure spreads, areas other than simple chatbots would first become lucrative. (From here, it’s about “new product lines.”)

- Large-scale codebase QA/refactoring agent: It becomes feasible to ground answers in only the “relevant files” found within millions of lines of code.

- Enterprise knowledge base (policies/contracts/emails/minutes) exploration-type analysis: Instead of dumping in all enterprise data, standardizing a search/extraction/verification loop may be cheaper and more accurate.

- Long-term log/security event analysis: As the period lengthens, ‘dumping everything’ becomes impossible, but RLM excels at drilling into the necessary segments to calculate correlations.

In conclusion, on a macro level, the AI market is likely to shift its weight from “model performance” to “AI system design/operational efficiency.” This flow drives productivity, digital transformation, and AI investment strategy.

< Summary >

Indiscriminately enlarging the context window leads to context rot, where performance collapses on long inputs.

RLM leaves inputs in an external workspace, allowing the model to search, extract, and summarize only the needed information for step-by-step inference.

In benchmarks, RLM has shown examples of raising accuracy and lowering costs in long/relational tasks.

Going forward, competitiveness is likely to shift to AI system operation design, like “exploration strategies, interruption policies, batch processing,” rather than “larger models.”

*Source: [ AI Revolution ]

– New AI Reasoning System Shocks Researchers: Unlimited Context Window